Where did it come from? Digital computing

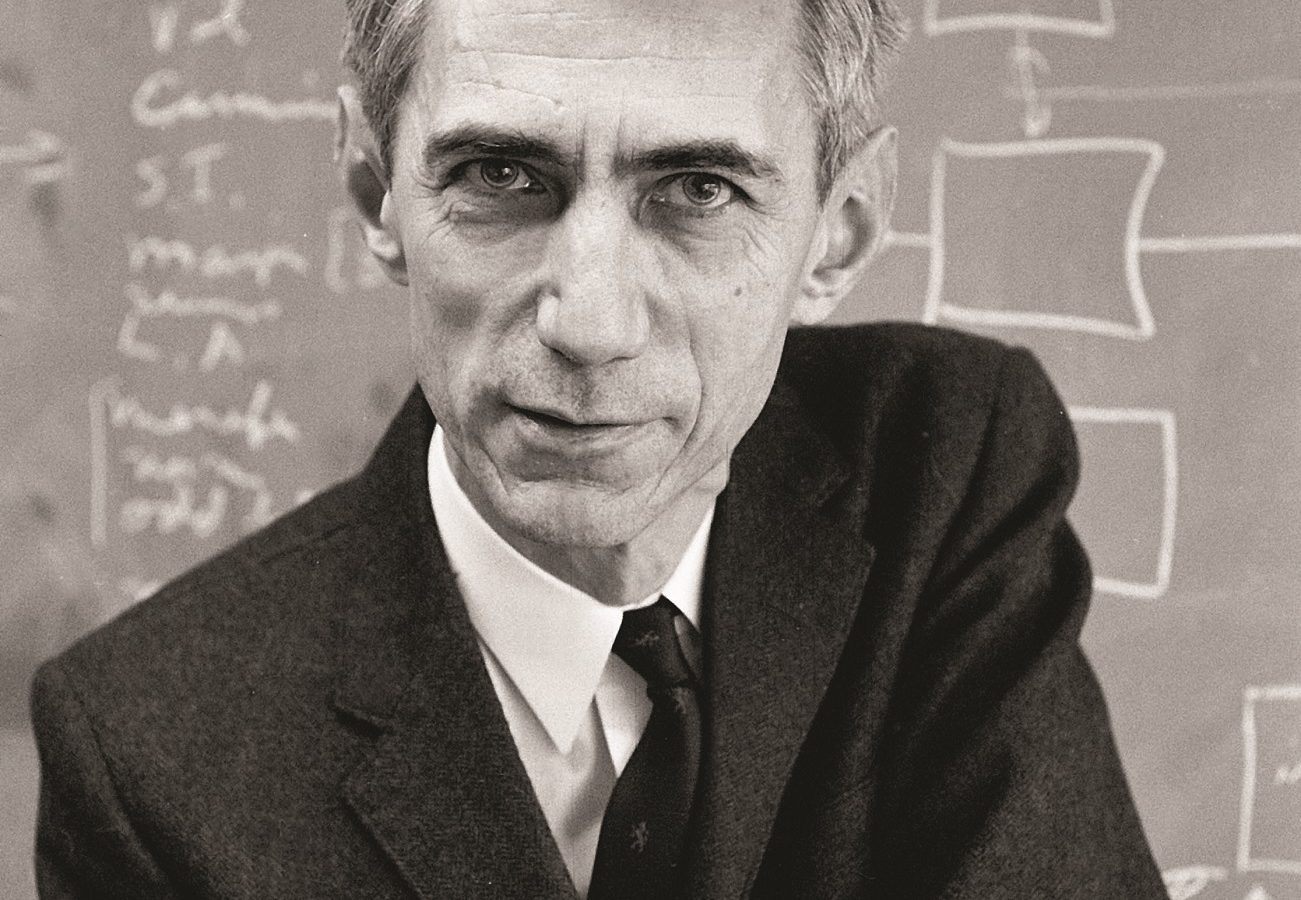

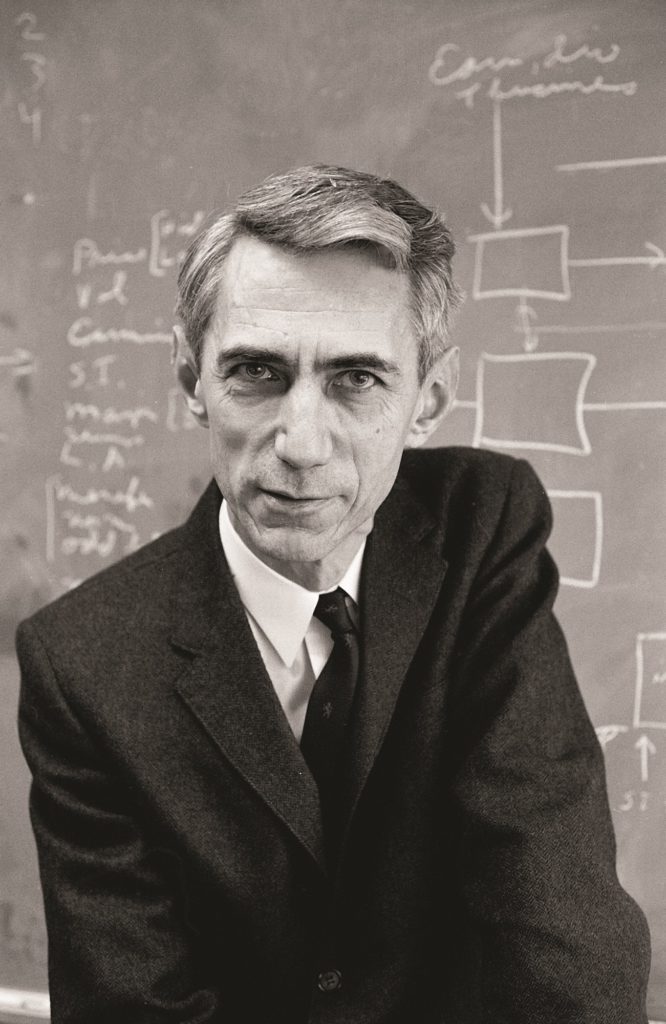

Early computers were room-sized mechanical brains complete with wheels, shafts, and cranks. Then the playful and ingenious Claude Shannon came along.

If you look for the origin of our information age, you could be looking a long time. Information and the machines that process it surround us like the weather, and it’s hard to predict where this wind will blow next or trace that storm back to where it started. But there are a handful of people who are lightning strikes: brilliant, transformative, and singular. One of them was Claude Shannon.

As a boy, Shannon was a tinkerer. Growing up in tiny Gaylord, Michigan, in the 1920s, he built an elevator in a barn and turned a barbed wire fence into a secret telegraph exchange. All his life he would think like this: practically and with his hands, building chess-playing computers and maze-solving mechanical mice.

Unsurprisingly, given that he was someone who would later learn to juggle while riding a unicycle, Shannon declined to settle on a single course of study. Instead, he took a dual degree in mathematics and engineering. It was not part of a grand design, he would say later. It was only that he didn’t know which he liked best, the math or the machines.

He finished his undergraduate degree in 1936, deep in the Great Depression, and the family furniture and coffin-making business attempted to draw him home. But fortunately for history, he spotted a postcard tacked to a bulletin board in the engineering department office, advertising for assistants to work on “a mechanical brain” at MIT.

“I pushed hard for that job, and I got it,” he would say later. “It was one of the luckiest things of my life.”

At MIT, Shannon was put to work on (and in) the mechanical brain, a room-sized contraption invented and overseen by the famous Vannevar Bush. Called the differential analyzer, it was a computer – but not the kind we know now. When Bush taught students about it, he’d start with the idea of Newton’s apple plummeting from its tree. If the apple were in a vacuum, it would simply accelerate at a constant rate, and its speed at any moment could be calculated with a single, simple equation. But in fact, the apple is falling through air, which creates a drag that grows as the apple speeds up. The actual speed of the apple, then, is governed by two interlinked equations: the drag depends on the speed, and the speed depends on the drag. Add a tumble that changes the drag and there are even more equations to consider. In the real world, even a simple problem like a falling apple can rapidly become too cumbersome for chalkboards.

Better, said Bush, to automate those calculations. To do that, one could build a machine. Perhaps one turning shaft could represent the acceleration due to gravity and another could represent the drag from the air. These could be connected to wheels and gears that would, both literally and mathematically, integrate the two accelerations into one.
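To see what the analyzer was automating, here is a minimal Python sketch of the falling-apple problem solved the digital way: instead of shafts that integrate continuously, the program takes many tiny time steps (the forward Euler method). It assumes drag proportional to the square of the speed, so the equations are dv/dt = G - k*v**2 and dy/dt = v; the drag constant `k` and the function names `fall` and `terminal_speed` are illustrative choices, not values or names from Bush's machine.

```python
import math

G = 9.81  # gravitational acceleration near Earth's surface, m/s^2


def fall(t_end, k, dt=1e-4):
    """Step the falling-apple equations forward in time (forward Euler).

    Assumes quadratic air drag: dv/dt = G - k*v**2, and dy/dt = v.
    k is an illustrative drag constant per unit mass (1/m), not a measured value;
    k = 0 gives the vacuum case, where the speed is simply G*t.
    Returns (speed, distance fallen) at time t_end.
    """
    v = 0.0
    y = 0.0
    for _ in range(round(t_end / dt)):
        accel = G - k * v * v  # drag grows with speed, which links the two equations
        y += v * dt            # integrate speed to get distance fallen
        v += accel * dt        # integrate acceleration to get speed
    return v, y


def terminal_speed(k):
    """Speed at which drag exactly balances gravity (G = k*v**2)."""
    return math.sqrt(G / k)


if __name__ == "__main__":
    for k in (0.0, 0.05):
        v, y = fall(2.0, k)
        print(f"k={k}: speed after 2 s = {v:.2f} m/s, distance = {y:.2f} m")
    print(f"terminal speed for k=0.05: {terminal_speed(0.05):.2f} m/s")
```

With `k = 0` the speed after two seconds matches `G * t`, the single simple equation of the vacuum case; with `k = 0.05` the speed falls behind and levels off toward a terminal speed of about 14 m/s. The two `+=` lines roughly play the part of the analyzer's integrating wheels.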

The machine embodied the problem, in that it obeyed the same set of differential equations as the falling apple. This analogous behaviour is what we refer to when we say the early computers were analog.

Analog computing was a successful approach: the differential analyzer was used to bring complex problems like electron scattering and power-grid load within reach of calculation for the first time. But by the time Shannon arrived, the machine had hit a wall: it had to be rebuilt every time the problem changed, and that rebuilding was taking too long.

Most of the brain work, Shannon would quickly learn, went into changing the configuration of a box full of 100 switches, which in turn changed which shafts were spinning and how they were geared together. The configuration of those switches was done by trial and error – mostly error. Any modern engineer would recognize that laying out switches and the connections between them is the essence of circuit design, but in those days, circuit design was more art than science.

Shannon made it into science. In fact, he made it into math.

In his undergraduate days, Shannon had come across the work of 19th-century thinker George Boole, who developed a system for figuring out whether logical statements were true or false. Boole took the arguments of Aristotelian logic (of the “all men are mortal; Socrates is a man; therefore Socrates is mortal” flavour) and created an algebraic system for evaluating them. He let one and zero stand for true and false, and introduced the operators AND, OR, NOT, and IF, by now familiar to anyone who’s ever written a single line of code. Boolean algebra put pure logic firmly in the realm of pure mathematics.
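Letting 1 and 0 stand for true and false means logic can be done with ordinary arithmetic. The Python sketch below uses the modern textbook formulas (AND as multiplication, inclusive OR as x + y - xy, NOT as 1 - x, and IF x THEN y as (NOT x) OR y); these are a present-day reading rather than Boole's own notation, and the function names are illustrative.

```python
from itertools import product

# Truth values as numbers: 1 = true, 0 = false, so logic becomes arithmetic.

def AND(x, y):
    return x * y            # 1 only when both are 1

def OR(x, y):
    return x + y - x * y    # inclusive or: 1 when either or both are 1

def NOT(x):
    return 1 - x

def IF(x, y):
    return OR(NOT(x), y)    # "if x then y" is false only when x = 1 and y = 0


if __name__ == "__main__":
    print("x y | AND OR IF")
    for x, y in product((0, 1), repeat=2):
        print(f"{x} {y} |  {AND(x, y)}   {OR(x, y)}  {IF(x, y)}")

    # An algebraic law checked by trying every case: NOT(x AND y) == (NOT x) OR (NOT y)
    assert all(NOT(AND(x, y)) == OR(NOT(x), NOT(y))
               for x, y in product((0, 1), repeat=2))
```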

Ninety years passed, during which no one did anything much with Boolean algebra. But as Shannon struggled to make sense of which switches should be thrown to reconfigure Bush’s computer, something twigged. Later, he tried to put his finger on what. It wasn’t quite that the open/close of a switch could also be a Boolean zero/one, though that was part of it. It was more, he told a journalist, the realization that two switches in series were equivalent to AND, because they both had to be closed to let current flow through, and two switches in parallel were equivalent to OR, because current would flow if either or both were closed. He realized that the design of circuits could be governed not by trial and error, but by the rules of Boolean algebra. Vannevar Bush’s great machine could be rebuilt – reprogrammed – with a bit of thought and a pen and paper, almost on the fly.
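Shannon's observation can be modelled directly: treat a closed switch as 1 and an open one as 0, then wire in series for AND and in parallel for OR. The hypothetical three-input circuits below (illustrative, not taken from Bush's analyzer) show the kind of saving the algebra makes possible: by the distributive law, xy + xz equals x(y + z), so a four-switch network can be replaced by a three-switch one, and checking every switch setting confirms the two behave identically.

```python
from itertools import product

# A switch is 1 when closed (current can pass) and 0 when open.

def series(*switches):
    """Current flows through switches in series only if every one is closed: AND."""
    return int(all(switches))

def parallel(*branches):
    """Current flows through parallel branches if at least one is closed: OR."""
    return int(any(branches))

def four_switch_circuit(x, y, z):
    # Two parallel branches, each with two switches in series: xy + xz.
    # x appears twice, so it needs two physical switches operated together.
    return parallel(series(x, y), series(x, z))

def three_switch_circuit(x, y, z):
    # The same circuit factored by the distributive law: x(y + z).
    return series(x, parallel(y, z))

def equivalent(f, g, n_inputs):
    """Compare two circuits by trying every combination of switch positions."""
    return all(f(*bits) == g(*bits) for bits in product((0, 1), repeat=n_inputs))


if __name__ == "__main__":
    print(equivalent(four_switch_circuit, three_switch_circuit, 3))
```

For a handful of switches, trying all 2^n settings is a perfectly good check; the point of Shannon's method is that the algebra finds the simpler circuit in the first place, without trial and error.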

What’s more, once switches were reduced to ones and zeros, switches themselves left the picture. Anything could take their place, and soon would, starting with vacuum tubes, then transistors. Computer technology leapt forward. The year was 1937 and Shannon was 21 years old.

There was probably no one else in the world who could have made Shannon’s breakthrough. He was the only man in a room full of engineers puzzling over switches who knew about the mathematics of formal logic. And he was also probably the only man with a background in formal logic who ever had to engineer a system of switches.

He wasn’t done contributing to computer science, not nearly. In 1941 he joined Bell Labs, that hothouse of scientific and technical invention that gave the world the transistor, the laser, and the solar cell. During the war he worked there on cryptography, but moonlighted on a secret project of his own. He was a genius among geniuses, cruising the narrow hallways on his unicycle. Even so, when he finally revealed the results of his secret project, it came as a dazzling bolt from the blue.

The bolt took the form of a 1948 paper called “A Mathematical Theory of Communication.” Scientific American would one day call it “the Magna Carta of the Information Age”: the document that founded an entire field. Among other things, it gave us the binary digit – the bit – and the idea that any piece of information could be encoded as the zeros and ones that Shannon had assigned to those switches. When we say today’s computers are digital, the zeros and ones are the digits we have in mind. If we live in a digital age, then this is the moment it started.
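Two ideas from that paragraph can be made concrete in a short Python sketch, under stated assumptions. First, text becomes zeros and ones once each character is mapped to bytes; the example uses UTF-8, a modern standard that postdates Shannon's paper. Second, picking one message out of N equally likely possibilities takes log2(N) binary digits, rounded up here to a whole number of bits. The helper names are illustrative.

```python
import math

def to_bits(text):
    """Encode text as a string of 0s and 1s, eight binary digits per UTF-8 byte."""
    return "".join(f"{byte:08b}" for byte in text.encode("utf-8"))

def from_bits(bits):
    """Reverse to_bits: group the digits into bytes and decode them as UTF-8."""
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")

def bits_to_choose(n_messages):
    """Whole number of binary digits needed to label n equally likely messages."""
    return math.ceil(math.log2(n_messages))


if __name__ == "__main__":
    bits = to_bits("bit")
    print(bits)                # 011000100110100101110100
    print(from_bits(bits))     # bit
    print(bits_to_choose(26))  # 5: enough to single out one letter of the alphabet
```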

Claude Shannon was in love with the deep particulars of the world. He would master them only to leap beyond them into a realm of pure abstraction, and then translate that back into machines. He would master juggling, then develop the first mathematical theory of juggling, then build a juggling robot. He played chess, redefined the mathematics of chess, and then wrote the defining paper on programming computers to play chess. He was silly: he once built a flame-throwing trumpet and invented a computer that did calculations using Roman numerals. He never sought publicity and rarely sought collaboration. He was very kind and very shy. Inside information theory, he is a god. Outside it, he is nearly forgotten.

The father of information theory died of complications from Alzheimer’s in 2001.