Artificial Intelligence: Can It Be Trusted?

TRuST panel discusses the benefits and perils of AI in a fast-changing world

Two extremes dominate the debate over the development of artificial intelligence (AI).

On one side, so-called “doomers” worry that unleashing machines and bots that can learn might lead to catastrophe. On the other, accelerationists, or “zoomers,” want to put the pedal to the metal and develop AI as quickly and as fully as possible. They envision a rosy techno-future in which AI works alongside human scientists to find, for example, cures for diseases or new technological solutions to climate change.

So which camp is right? Can we trust AI to shape the future? What are its perils and promises? Should AI be regulated? If so, how can regulations protect the most vulnerable without hindering beneficial innovation?

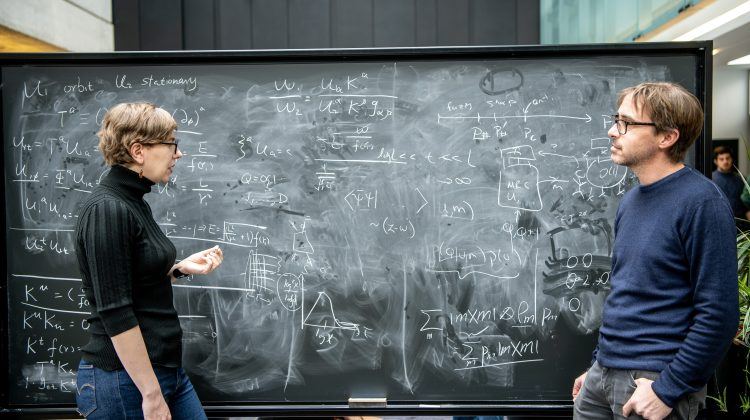

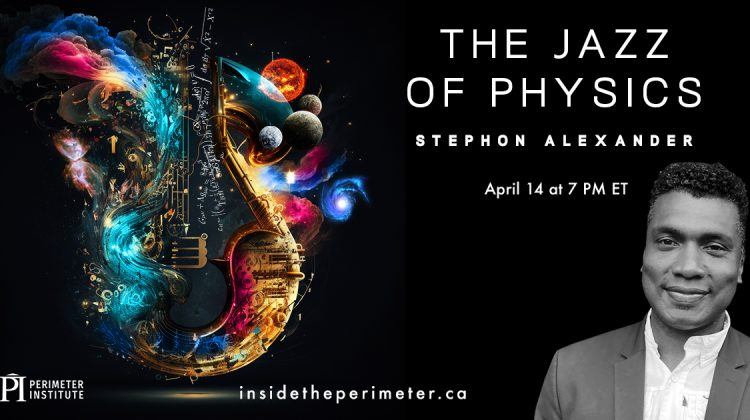

Perimeter Institute recently partnered with the University of Waterloo (UW) and the Trust in Research Undertaken in Science and Technology (TRuST) Scholarly Network to host a panel discussion addressing these questions.

“One of the Network’s key pillars is to engage with and listen to community members to better understand why people do or don’t trust scientific and technical information. This is a natural area of partnership for Perimeter Institute and the University of Waterloo, two institutes that already share a close connection,” said Emily Petroff, Associate Director, Strategic Partnerships, Grants, and Awards at Perimeter, in introducing the event.

The panel brought together an extraordinary group of experts: Makhan Virdi, a researcher at NASA’s Langley Research Center; Leah Morris, senior director of the Velocity Program at Radical Ventures; Anindya Sen, economics professor at UW and associate director of the Waterloo Cybersecurity and Privacy Institute; and Lai-Tze Fan, Canada Research Chair in Technology and Social Change at UW. The discussion was moderated by Jenn Smith, engineering director and WAT Site co-lead at Google Canada.

The panel was introduced by TRuST co-leads Ashley Rose Mehlenbacher, Canada Research Chair in Science, Health, and Technology Communication at UW, and Donna Strickland, a UW professor, Nobel Prize winner, and member of Perimeter’s board of directors.

Over a wide-ranging discussion, the panellists grappled with difficult, often intractable problems surrounding the future of AI.

Virdi, for example, described the search for a middle path between AI’s real dangers and its real benefits: a challenging course to chart amid the whirlwind of hyperbole that AI often attracts.

“I think the reality is somewhere in between these two extremes,” Virdi said.

AI clearly has plenty of beneficial use cases. But it doesn’t serve anyone’s interest to be careless.

AI does not need to reach human-level intelligence to create problems, Virdi said. It can still trick us “if it can pretend to be human, and be convincing enough in terms of the language, the content, the structure, and the texture.” In that sense, he said, we “are already there” when it comes to the need for regulation. At a bare minimum, AI should be required to be transparent about what it is and what it is doing, he added.

The panel covered plenty more: how to deal with ‘bad actors’ using AI, whom AI will serve and whom it might harm, and all-important questions about privacy, data security, and intellectual property in the AI ecosystem.

Watch the full, fascinating public discussion on Perimeter’s YouTube channel: https://youtu.be/FBAE3E4PSgo?si=nIjnj26nyV27etyr