“We are on the cusp of a revolution”

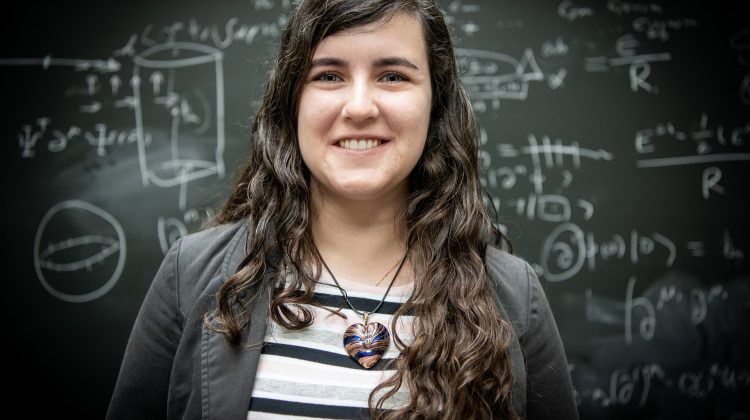

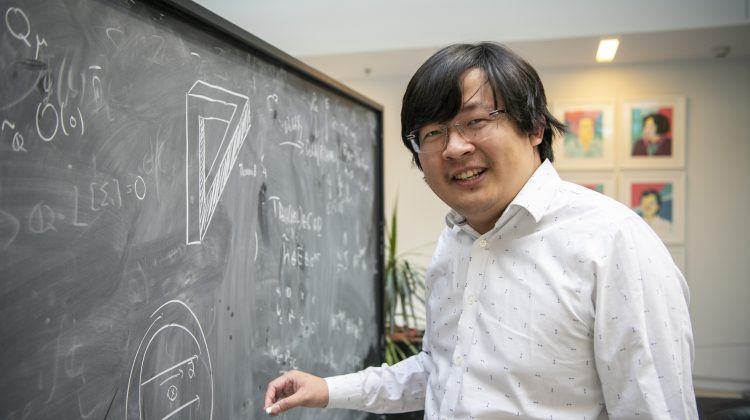

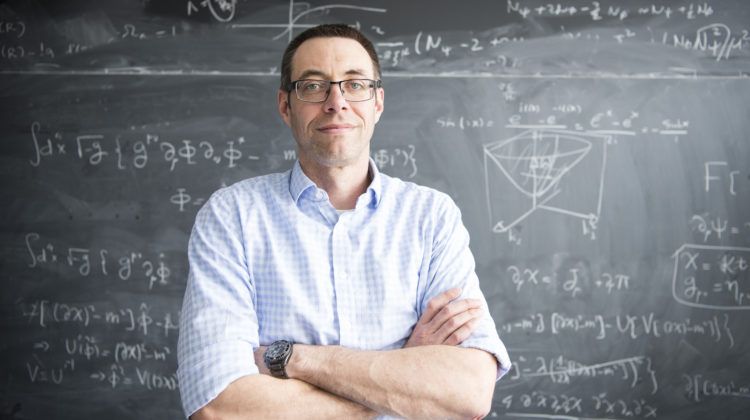

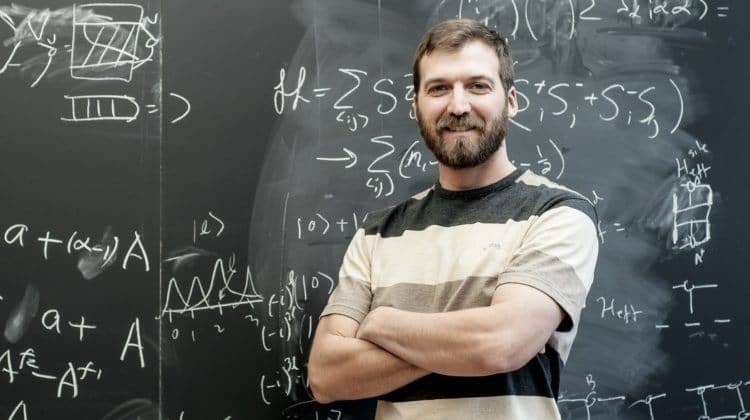

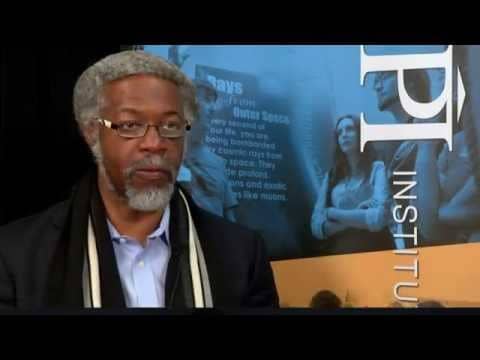

Perimeter associate faculty member Roger Melko says the large language models behind chatbots will expand what large-scale quantum computer simulations can do.

When ChatGPT was launched on November 30, 2022, it took the world by surprise.

Less than a decade ago, many experts predicted that computers would never master language. But here was a conversational artificial intelligence (AI) chatbot that could, with simple prompts, generate poetry, a short essay, or dialogue.

Within two months, ChatGPT had 100 million users. Now, one can hardly turn around without hearing news about it or about similar chatbots built on deep-learning models known as large language models (LLMs), such as Google’s Bard.

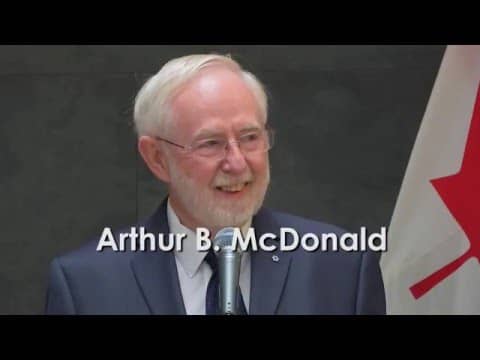

But what most people don’t know is that by the time ChatGPT was launched, researchers at the Perimeter Institute Quantum Intelligence Lab (PIQuIL) and the Vector Institute for Artificial Intelligence were already working hard on adapting language models for use in quantum computing.

Roger Melko, a Perimeter associate faculty member and the founder of PIQuIL, and Juan Carrasquilla, a researcher at Vector who recently moved to ETH Zurich, have now published a perspective paper in Nature Computational Science describing how the same algorithmic structure in LLMs is being used to advance quantum computing.

Their groups, along with many other labs in Canada and abroad, are already deeply involved in adapting large language model algorithms to quantum computing.

“Since 2018, our groups have been pushing a lot of the fundamental science behind language models,” says Melko, who is also an affiliate at Vector and a professor at the University of Waterloo. “Now, we have a pretty good roadmap for the technology, so we are ready to attempt the second phase, the scaling phase.”

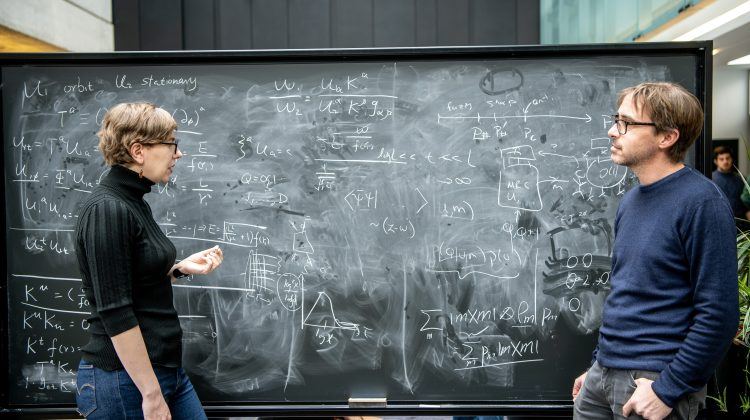

Carrasquilla began studying and applying machine learning concepts when he was a postdoctoral researcher at Perimeter, working with Melko and other colleagues.

“It was an inspiring time at Perimeter,” he says, adding that Melko provided “complete freedom and unconditional support.” After that, Carrasquilla worked at the quantum annealing company D-Wave in British Columbia. “I was exposed to a wide array of machine learning models including powerful language models based on recurrent neural networks,” he says.

While at the Vector Institute, which is part of a pan-Canadian AI research strategy, he worked with Melko and other collaborators on using large language models to learn quantum states.

These AI models could, in turn, play a key role in helping to scale up quantum computers. If quantum computers can be scaled up to operate with fewer errors and many more qubits, they could be used for many types of simulations: to design new quantum materials for clean energy solutions, for example, or for drug discovery.

Language models that predict the behaviour of future quantum computers can be trained, fully or in part, on real data from today’s modest quantum devices.

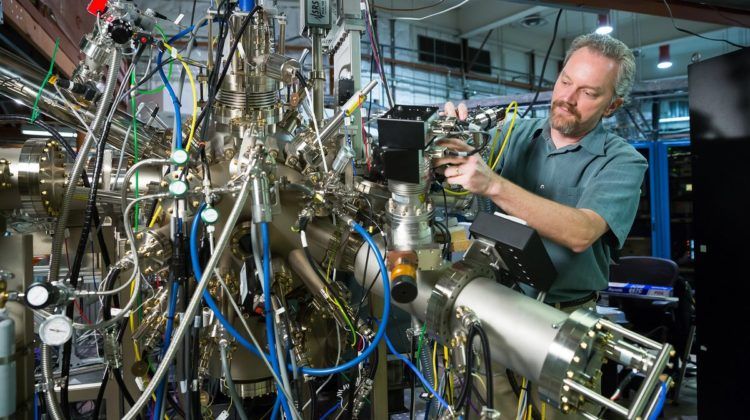

The biggest challenge is obtaining suitable datasets of qubit measurement outcomes to train the AI models, Melko adds. “We are establishing relationships with quantum computing experts at universities and private companies, to build that data pipeline,” he says.

But how could the large language models behind chatbots like ChatGPT, which are all about generating words, have anything to do with quantum computing simulations using quantum bits (qubits)?

As Melko explains, this has to do with the way LLMs work, which is by making predictions. “The basic job of a language model is to take what’s called a prompt and complete the text,” he says. “When it has 9 words, it will predict the 10th word based on the previous 9. And then the next word based on the previous 10.”
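The prediction loop Melko describes can be sketched with a toy model. The Python below is an illustrative stand-in, not how ChatGPT works: the hypothetical helpers `train_bigram` and `complete` implement a simple bigram model, which looks only at the last word, whereas real LLMs use large neural networks that condition on the entire prompt. The loop itself, predicting one word and feeding it back in as context, is the same idea.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """For each word, count which words follow it anywhere in the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def complete(prompt: str, follows: dict[str, Counter], max_new_words: int) -> str:
    """Extend the prompt one word at a time, always picking the most frequent follower."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# A made-up three-sentence corpus, for illustration only.
corpus = [
    "we are on the cusp of a revolution",
    "we are on the cusp",
    "we are competitive",
]
model = train_bigram(corpus)
print(complete("we", model, max_new_words=10))  # prints: we are on the cusp of a revolution
```

Each new word is chosen from what most often followed the previous word in the corpus; generation stops at "revolution" because nothing ever follows it.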

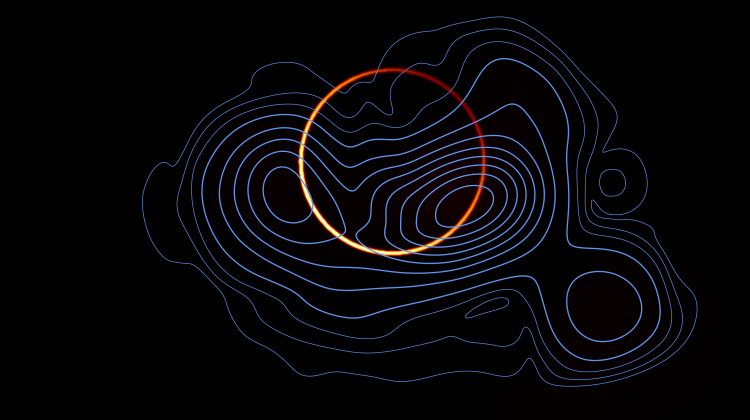

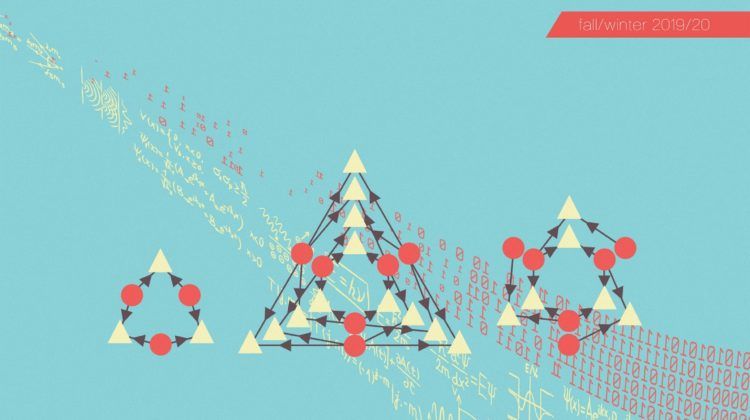

Quantum computing is also about predictions, he adds. Instead of words, researchers want to predict qubit measurement outcomes: the probability of what the next qubit will read, given the outcomes already measured on the qubits before it.

“When you start performing measurements, one at a time, on qubits 1, 2 and 3, and predict the measurements for qubits 4, 5 and 6, and so on — if you collect enough of those measurements, you have what is called a wave function,” Melko says.
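The same one-at-a-time structure carries over to qubits. In the sketch below, a hypothetical `PrefixCountModel` estimates the probability of each qubit's outcome given the outcomes of the qubits measured before it, then multiplies these conditional probabilities together (the chain rule of probability) to score a full measurement string. Simple counts stand in here for the recurrent or transformer networks used in research, and the measurement data is invented for illustration; a neural network would be needed to generalize to strings never seen in the data.

```python
import random
from collections import Counter


class PrefixCountModel:
    """Toy autoregressive model of qubit measurement outcomes.

    Estimates p(s_i | s_1 ... s_{i-1}) by counting how often each prefix of
    the measured bit strings occurs. Assumes all samples are equal-length
    strings of '0' and '1'.
    """

    def __init__(self, samples: list[str]):
        self.n_qubits = len(samples[0])
        self.prefix_counts: Counter = Counter()
        for s in samples:
            for i in range(self.n_qubits + 1):
                self.prefix_counts[s[:i]] += 1

    def conditional(self, prefix: str, bit: str) -> float:
        """p(next qubit reads `bit` | earlier qubits read `prefix`)."""
        denom = self.prefix_counts[prefix]
        return self.prefix_counts[prefix + bit] / denom if denom else 0.0

    def probability(self, bits: str) -> float:
        """Chain rule: p(s) = product over i of p(s_i | s_1 ... s_{i-1})."""
        p = 1.0
        for i, b in enumerate(bits):
            p *= self.conditional(bits[:i], b)
        return p

    def sample(self, rng: random.Random) -> str:
        """Generate outcomes qubit by qubit, like predicting the next word."""
        bits = ""
        for _ in range(self.n_qubits):
            bits += "1" if rng.random() < self.conditional(bits, "1") else "0"
        return bits


# Hypothetical data resembling repeated measurements of a GHZ state.
data = ["000", "111", "000", "111"]
model = PrefixCountModel(data)
print(model.probability("000"), model.probability("010"))  # prints: 0.5 0.0
print(model.sample(random.Random(0)))
```

One caveat: measurements in a single basis fix only the probabilities of outcomes. For states whose amplitudes are all positive, that is enough to determine the wave function; in general, measurements in several bases are needed.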

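A wave function is a mathematical description of the quantum state of a system, such as a single particle or a collection of qubits. For a particle, it is typically written as a function of position (or, equivalently, momentum), spin, and time. The squared magnitude of the wave function gives the probabilities of measurement outcomes, so it can be used to calculate and predict the results of experiments on subatomic particles and to perform quantum computer simulations.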
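How a wave function yields predictions can be shown in a few lines. The sketch below stores a three-qubit wave function as a dictionary from measurement outcomes to amplitudes (an illustrative choice of representation) and applies the Born rule: the probability of each outcome is the squared magnitude of its amplitude. The example, a Greenberger-Horne-Zeilinger (GHZ) state, is chosen only for simplicity.

```python
import math


def born_probabilities(amplitudes: dict[str, complex]) -> dict[str, float]:
    """Born rule: the probability of outcome s is |psi(s)|^2 (normalized)."""
    norm = sum(abs(a) ** 2 for a in amplitudes.values())
    return {s: abs(a) ** 2 / norm for s, a in amplitudes.items()}


# 3-qubit GHZ state (|000> + |111>)/sqrt(2); amplitudes not listed are zero.
ghz_plus = {"000": 1 / math.sqrt(2), "111": 1 / math.sqrt(2)}
# Same magnitudes, opposite relative sign: a physically different state.
ghz_minus = {"000": 1 / math.sqrt(2), "111": -1 / math.sqrt(2)}

print(born_probabilities(ghz_plus))
print(born_probabilities(ghz_minus))
```

Both states give a 50/50 chance of reading 000 or 111, even though they differ in the sign of one amplitude, which is why measuring in one basis alone cannot always pin down the wave function.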

Quantum computing harnesses strange quantum mechanical phenomena, such as superposition and entanglement, to perform computations.

A regular laptop uses on-off electrical switches to represent strings of ones and zeros, or “bits,” the foundation of binary code. A quantum computer instead stores information in quantum mechanical states, such as a particle in a superposition of spin up and spin down, which serve as quantum bits, or qubits. Describing the general state of a set of qubits takes a list of numbers that doubles in length with every qubit added; this, together with entanglement, is what allows quantum computers to perform certain computations far beyond the reach of regular computers.

There are already quantum computing systems operating in labs around the world. But the big challenge is scaling them up to run quantum simulations that might involve thousands, or even millions, of qubits.
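A quick calculation shows why large quantum systems are hard to handle on regular computers. Storing a general n-qubit state exactly means keeping 2^n complex amplitudes; the sketch below assumes double-precision complex numbers at 16 bytes each.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store all 2**n complex amplitudes of an n-qubit state.

    Assumes double-precision complex numbers (16 bytes each) by default.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    print(f"{n} qubits: {state_vector_bytes(n):,} bytes")
```

Thirty qubits already need 16 GiB and 50 qubits need 16 PiB, which is one reason researchers look for compact representations of quantum states, such as neural networks, rather than storing every amplitude.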

Qubits are very prone to errors caused by “noise” in the system, such as thermal fluctuations, electromagnetic interference, imperfections in quantum gates, and unwanted interactions with the environment. Quantum error correction codes can fix such errors, but they spread each protected “logical” qubit across many physical qubits, and every added qubit is another source of errors. This is why it is hard to scale up quantum computers.
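The trade-off in error correction, spending extra hardware to protect information, can be seen in the simplest possible code. The sketch below uses a classical three-bit repetition code with majority-vote decoding, a deliberately simplified stand-in: real quantum codes such as the surface code must also handle phase errors and cannot simply copy qubits, so they rely on entangling encodings and syndrome measurements instead.

```python
from itertools import product


def majority_decode(received: tuple[int, ...]) -> int:
    """Recover the logical bit by majority vote over the three copies."""
    return 1 if sum(received) >= 2 else 0


def logical_error_rate(p: float) -> float:
    """Exact failure probability of the 3-bit repetition code.

    Logical 0 is stored as (0, 0, 0); each copy flips independently with
    probability p. Sum the probability of every flip pattern that majority
    vote decodes to the wrong value.
    """
    failure = 0.0
    for flips in product((0, 1), repeat=3):
        prob = 1.0
        for f in flips:
            prob *= p if f else 1 - p
        if majority_decode(flips) != 0:  # received word is (0,0,0) XOR flips = flips
            failure += prob
    return failure


for p in (0.01, 0.1, 0.6):
    print(p, "->", logical_error_rate(p))
```

The results match the formula 3p² − 2p³. For p = 0.01 the logical error rate drops to about 0.0003, but for p = 0.6 it rises to 0.648: redundancy only helps when each physical component is reliable enough, which is why scaling demands both more qubits and better ones.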

Melko says error correction built on LLMs could be much more scalable: “I believe the large language models could be the future of quantum error correction because these are architectures that have already demonstrated scaling.”
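Decoding, which means deciding which correction to apply from the error-detecting parity checks (the syndrome), is the part of error correction where learned models come in. The sketch below learns a decoder for a three-bit repetition code from simulated noisy data instead of deriving it by hand. It is an illustration, not the method used by Melko's group or Google: a lookup table built by the hypothetical `train_decoder` stands in for the neural network, which becomes necessary for realistic codes whose number of possible syndromes is far too large to tabulate.

```python
import random
from collections import Counter, defaultdict


def syndrome(bits: tuple[int, ...]) -> tuple[int, int]:
    """Parity checks on neighbouring copies; 0 means 'these two agree'."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def train_decoder(p: float, n_samples: int, rng: random.Random) -> dict:
    """Learn the most frequent flip pattern behind each syndrome from simulated data."""
    seen: dict = defaultdict(Counter)
    for _ in range(n_samples):
        flips = tuple(1 if rng.random() < p else 0 for _ in range(3))
        # Codewords (0,0,0) and (1,1,1) both have syndrome (0,0), so the
        # syndrome of a received word depends only on which bits flipped.
        seen[syndrome(flips)][flips] += 1
    return {s: counts.most_common(1)[0][0] for s, counts in seen.items()}


def correct(received: tuple[int, ...], table: dict) -> tuple[int, ...]:
    """Undo the flip pattern the trained table predicts for this syndrome."""
    guess = table.get(syndrome(received), (0, 0, 0))
    return tuple(b ^ g for b, g in zip(received, guess))


table = train_decoder(p=0.1, n_samples=20_000, rng=random.Random(1))
print(table)
print(correct((0, 1, 0), table), correct((1, 1, 0), table))
```

With a 10% flip rate, the learned table picks the single-bit flip for each nonzero syndrome, which reproduces majority voting without being told the rule.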

In recent years, the pioneering work done by Melko and his graduate students has demonstrated the potential of language models in quantum error correction, Carrasquilla adds.

“Language models can address a diverse range of challenges that quantum computing aims to solve. Therefore, this research can provide a strong baseline for quantum computers to surpass and demonstrate practical advantage,” Carrasquilla says. These models can also be used to benchmark the preparation of quantum states in quantum computing, which is key to the development of quantum devices, he adds.

Conversational chatbots are trained on massive amounts of text scraped from the internet. Comparable volumes of data on qubit measurements are much harder to obtain for training language models for quantum computing, but that is exactly the data pipeline researchers around the world are now building, Melko adds.

One problem with conversational chatbots like ChatGPT is that they can “hallucinate,” or generate answers that are completely off-base. But Melko believes that language models for quantum computing can be trained on good data from the start, making them less prone to hallucinations.

“It is all about where your data comes from. Who is curating it? What kinds of biases does your data have? All of this is being baked into tomorrow’s quantum computers today,” Melko says.

Thanks to AI technology, Melko predicts there could be a “massive acceleration” in quantum computing technology.

“We are certainly not the only game in town, as big players like Google have already demonstrated the ability of AI to perform quantum error correction using language models. But what I think is amazing is that we are competitive. Canada is a hotbed for innovation in AI and quantum computing,” Melko says.

The technologies will also become intertwined, so that one breakthrough leads to another. Recently, Google DeepMind reported in Nature that its AI system AlphaGeometry can solve olympiad-level geometry problems with rigorous proofs, performing nearly as well as the average gold medallist in the International Mathematical Olympiad.

“All of these technologies will be combined into a larger AI strategy in science and mathematics,” Melko says. “We are really at the precipice. We are on the cusp of a revolution, and we are seeing it play out in real time.”